Websites and applications face ever-growing traffic volumes. With the rise of AI-powered tools, cloud-native applications, IoT ecosystems, and remote-first workforces, maintaining seamless digital experiences has become a pressing concern. Network Load Balancing is a core strategy for managing that traffic in today’s IT systems.

So, what is network load balancing? NLB distributes incoming network traffic across multiple servers, preventing overload, improving reliability, and accelerating response times. This in-depth guide will help you understand:

- What Network Load Balancing is and how it works

- Different types of network load balancers and algorithms

- Best practices and benefits of network load balancing

- Popular load balancing tools and platforms

- Future trends shaping NLB

Whether it is a SaaS platform, a high-traffic eCommerce store, or a live video streaming service, users expect instant responsiveness, minimal downtime, and consistent performance. Organizations must ensure their infrastructure is resilient, scalable, and fault-tolerant to meet these high expectations. That is where Network Load Balancing (NLB) comes in. Let us get started with the detailed guide.

What Is Network Load Balancing? Everything You Need to Know

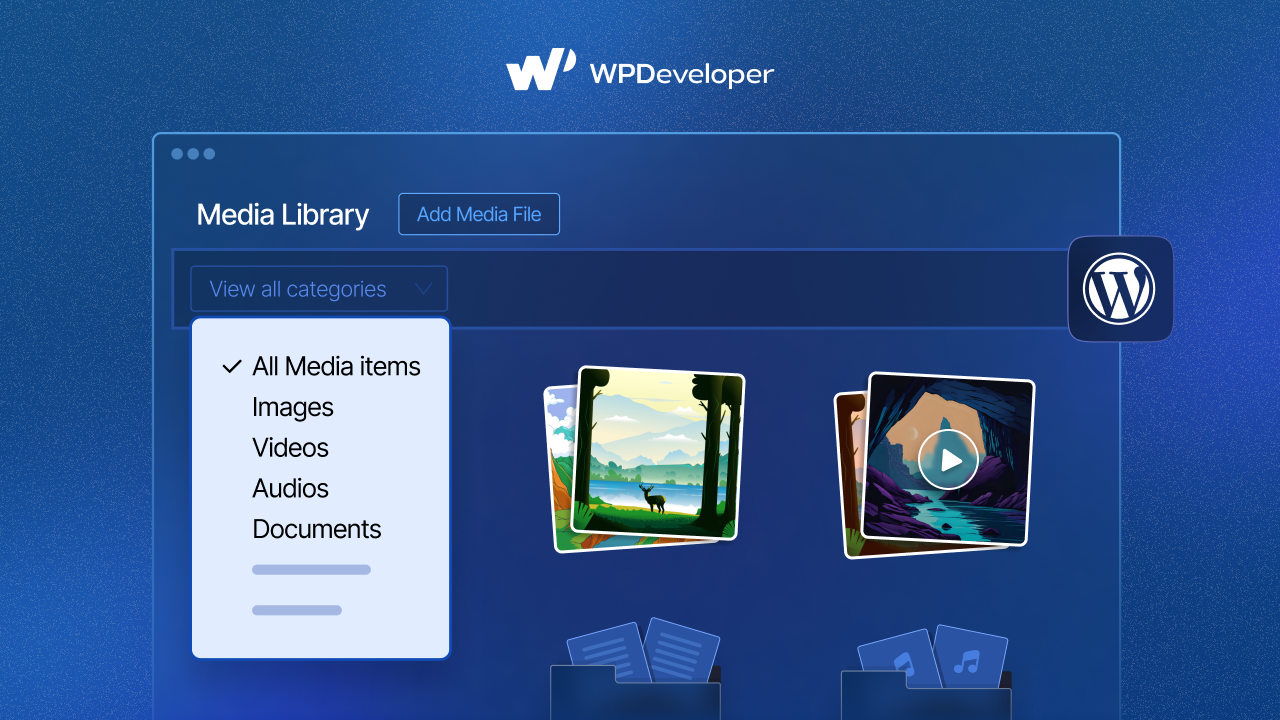

Network Load Balancing (NLB) spreads incoming traffic across multiple servers so that no single server gets overloaded. It works at the transport layer (Layer 4) of the OSI model, which means it routes TCP and UDP connections based on IP addresses and ports, without inspecting the application data they carry (application protocols such as FTP run on top of TCP). This helps websites and apps stay fast and available, even when there is a lot of traffic.

From websites and applications to gaming servers and IoT platforms, NLB is used in a variety of digital ecosystems. Unlike Application Load Balancing (which routes based on URL or session data), NLB focuses on IP addresses and ports. By intelligently distributing traffic, NLB ensures that:

👉 No single server is overwhelmed

👉 Performance remains high

👉 Infrastructure becomes fault-tolerant

Network Load Balancing vs. Application Load Balancing

In real-world scenarios, NLB and ALB are often used together, especially in microservice architectures. Here is how the two differ:

| Feature | Network Load Balancing (NLB) | Application Load Balancing (ALB) |
| --- | --- | --- |
| OSI Layer | Layer 4 (Transport) | Layer 7 (Application) |
| Traffic Type | TCP/UDP | HTTP/HTTPS |
| Decision Criteria | IP address, port | URL path, headers, cookies |
| Performance | Low latency, fast routing | Content-aware, better for APIs |
| Use Case | Gaming, video streaming | Microservices, REST APIs, websites |

Why Is Network Load Balancing Important in 2025?

Today’s fast-moving digital world requires websites and online services to perform flawlessly. Even during sudden traffic spikes or heavy usage, users expect instant access, smooth performance, and zero downtime. Whether they are shopping online, streaming content, or using AI-powered apps, they expect a fast experience.

Network Load Balancing helps distribute the flow of internet traffic across multiple servers to keep everything running smoothly and reliably. Let us break down why this matters more than ever today.

✅ Real-Time Interactions Are the Norm

From chatting with AI assistants to watching live sports and playing multiplayer games, users expect everything to work instantly. Even a few seconds of delay can frustrate users and push them toward competitors. Network Load Balancing helps by directing users to the server that can respond the fastest, keeping things running in real time.

✅ Cloud-Native & Multi-Region Deployments

Modern businesses no longer host their websites or apps on just one server in one place. They often use cloud services like AWS, Azure, or Google Cloud and spread their apps across different regions of the world. This makes things faster for users, no matter where they are, but it also makes traffic routing more complicated. NLB steps in to handle this complexity by sending each user’s request to the best available server based on speed, location, and availability.

✅ IoT And Edge Computing

From watches that monitor your health to connected cars and home assistants, smart devices are everywhere now. These devices often send and receive data constantly. Instead of routing all this data to a central server, edge computing allows processing to happen closer to where the data is generated. NLB plays a key role here by deciding where to send that data, either to a central data center or to a nearby edge server. This ensures faster response and lower delays.

✅ AI Workloads And GPU Clusters

AI is at the heart of many applications today, whether it is for recommendations, image recognition, or automated decisions. Running AI models requires powerful computers (often using GPUs) working together. NLB helps distribute incoming AI tasks across these computing resources efficiently, so no single machine gets overwhelmed, and the system can process tasks in parallel. This keeps performance fast and stable, even under heavy loads.

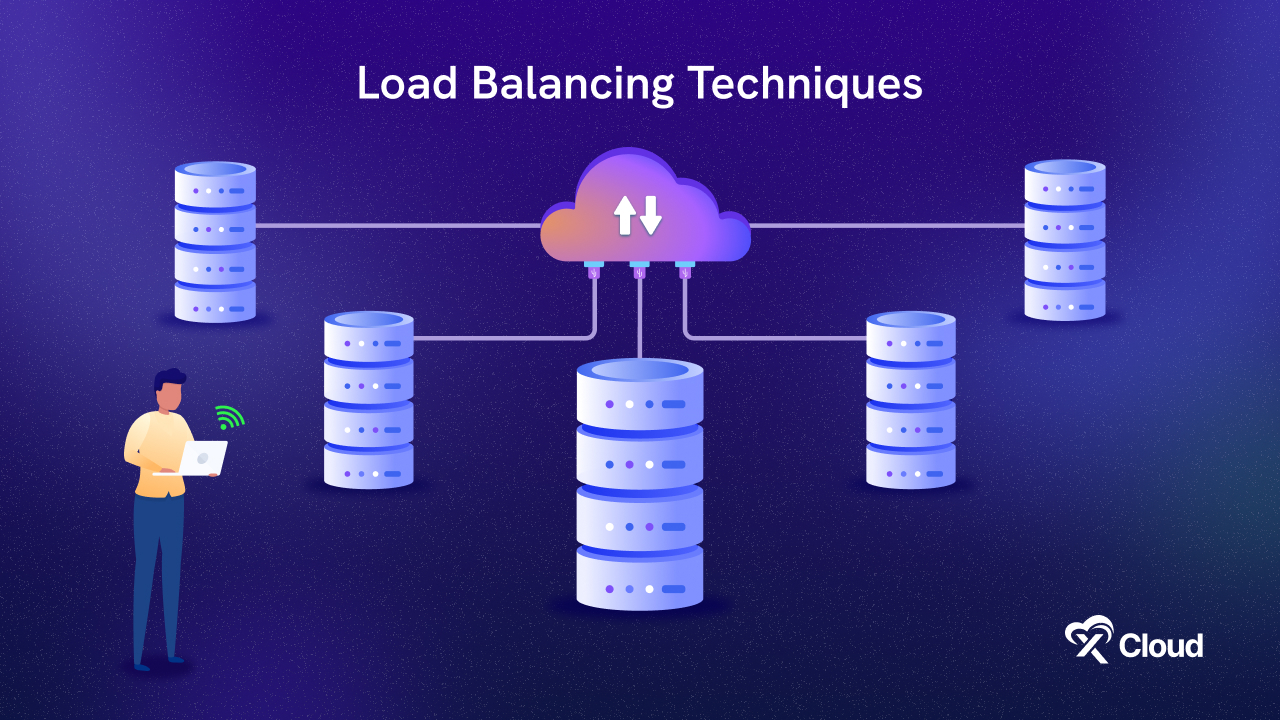

How Network Load Balancer Works: A Beginner-Friendly Guide

Imagine you are calling a customer support hotline. When you dial the number, you do not get connected directly to a support agent. Instead, your call goes to an automated system (the load balancer). It quickly checks which agent is available, has the shortest queue, or is best equipped to handle your issue. Then, it routes your call to that agent so you get help as efficiently as possible.

That is how a network load balancer works. It receives incoming traffic and smartly directs it to the best server to handle the request, ensuring fast and reliable service.

Whenever a user, whether it is a person using a browser, a mobile app, or even a smart device, sends a request to access a website or online service, here is what happens step-by-step:

- A user sends a request: This could be as simple as opening a website, clicking a button on a mobile app, or a smart device sending data to the cloud.

- The request hits a public IP address: Every online service has an IP address. But in this case, the address does not point to a single server; it points to a load balancer.

- Load balancer receives the request: Think of the load balancer as a smart traffic cop. It does not process the request itself—it decides where to send it.

- It checks server health and availability: The load balancer regularly monitors all the servers in its pool. If one server is down or overloaded, it will not send any new requests to it.

- It chooses the best server using a specific rule (algorithm): Based on the chosen load balancing algorithm (explained below), the request is routed to the most suitable server.

- The chosen server handles the request: This server processes the user’s request (like loading a web page or retrieving data) and sends back a response.

- The response may be routed back through the load balancer: Some systems route the server’s response through the load balancer for security or consistency, while others go directly back to the user.
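The steps above can be sketched in a few lines of Python. This is a minimal illustration rather than how any particular product is implemented: the `Server` class, the `route` function, and the server names are hypothetical, and the `choose` argument stands in for whichever algorithm is configured.

```python
from dataclasses import dataclass
from typing import Callable, List


@dataclass
class Server:
    name: str
    healthy: bool = True  # in practice, updated by periodic health checks


def route(servers: List[Server], choose: Callable[[List[Server]], Server]) -> Server:
    """Pick a backend for one request: drop unhealthy servers, then apply the algorithm."""
    candidates = [s for s in servers if s.healthy]
    if not candidates:
        raise RuntimeError("no healthy servers available")
    return choose(candidates)


# Hypothetical pool: app-2 has failed its health check, so it is skipped.
pool = [Server("app-1"), Server("app-2", healthy=False), Server("app-3")]
chosen = route(pool, choose=lambda candidates: candidates[-1])
print(chosen.name)  # app-3
```

Keeping the health filter separate from the selection rule means the algorithm can be swapped without touching the failover logic.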

How Do Load Balancers Decide Where to Send Traffic?

Once a load balancer receives a request, its next job is to figure out which server should handle it. But it does not make that decision randomly. There are smart strategies behind it, known as load balancing algorithms. Think of these as rules the balancer follows to make sure traffic flows smoothly and servers do not get overwhelmed. Let us break down a few of the most popular strategies in everyday terms:

1. Round Robin – The Equal Turns Rule

Imagine a group of customer service agents sitting in a row. As each call comes in, it is handed to the next agent in line, one by one, then it loops back to the first. That is Round Robin. It is simple. Every server gets a turn, regardless of how busy or powerful it is. This works well when all servers are similar and requests are light or short-lived.
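As a rough sketch of the idea, assuming a fixed pool of three hypothetical servers, Round Robin is just a repeating cycle over the server list:

```python
from itertools import cycle

# Hypothetical server names; a real pool would come from configuration.
servers = ["app-1", "app-2", "app-3"]
rotation = cycle(servers)


def next_server() -> str:
    """Return the next server in line, looping back to the first after the last."""
    return next(rotation)


assignments = [next_server() for _ in range(5)]
print(assignments)  # ['app-1', 'app-2', 'app-3', 'app-1', 'app-2']
```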

2. Least Connections – The Least Busy Server

Now, picture a checkout line at the grocery store. You would naturally go to the cashier with the shortest line, right? That is exactly what this algorithm does. It monitors how many active sessions each server has, and directs new traffic to the one with the fewest connections. It is great when users stay connected for different amounts of time, like in video calls or chat apps.
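A minimal sketch of this rule, assuming the balancer keeps a per-server count of open connections (the dictionary and function names here are illustrative only):

```python
from typing import Dict


def pick_least_connections(active: Dict[str, int]) -> str:
    """Return the server with the fewest active connections (first one wins a tie)."""
    return min(active, key=active.get)


def open_connection(active: Dict[str, int]) -> str:
    """Route a new connection and record it against the chosen server."""
    server = pick_least_connections(active)
    active[server] += 1
    return server


# Hypothetical snapshot of active connections per server.
active_connections = {"app-1": 12, "app-2": 4, "app-3": 9}
print(open_connection(active_connections))  # app-2
print(active_connections["app-2"])          # 5
```

A real balancer would also decrement the count when a connection closes; that bookkeeping is left out here to keep the selection rule visible.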

3. IP Hash – Stick with What You Know

This one is all about consistency. It hashes the user’s IP address (or part of it) and uses the result like a fingerprint to assign them to the same server each time. That way, returning users land where they left off, as long as their IP address and the server pool stay the same. This is ideal for applications that store session data or need users to stay “glued” to one server (like logged-in dashboards or shopping carts).
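One way to sketch this, assuming a simple hash-then-modulo scheme (real products may hash differently or use consistent hashing), is shown below. The function name and addresses are hypothetical.

```python
import hashlib
from typing import List


def pick_by_ip_hash(client_ip: str, servers: List[str]) -> str:
    """Map a client IP to a server by hashing it and taking the result modulo the pool size."""
    digest = hashlib.sha256(client_ip.encode("utf-8")).digest()
    index = int.from_bytes(digest[:8], "big") % len(servers)
    return servers[index]


servers = ["app-1", "app-2", "app-3"]
first_visit = pick_by_ip_hash("203.0.113.7", servers)
second_visit = pick_by_ip_hash("203.0.113.7", servers)
print(first_visit == second_visit)  # True
```

With plain modulo hashing, adding or removing a server changes the mapping for many clients, which is one reason some systems prefer consistent hashing.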

4. Weighted Round Robin – Stronger Servers Get More Work

Back to our call center example—but now, some agents are faster and more skilled than others. You would not want to give them the same number of calls as the newbies, right? Weighted Round Robin accounts for that. It assigns more traffic to more capable servers. For instance, if Server A is twice as powerful as Server B, it might handle two requests for every one that B gets. It is smart load distribution with performance in mind.
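The two-to-one example can be sketched as follows. This is the simplest possible version, assuming integer weights and hypothetical server names: each server is repeated in the rotation as many times as its weight.

```python
from itertools import cycle

# Hypothetical weights: server-a is treated as twice as capable as server-b.
weights = {"server-a": 2, "server-b": 1}

# Repeat each server name according to its weight, then rotate through the list.
schedule = [name for name, weight in weights.items() for _ in range(weight)]
rotation = cycle(schedule)

first_six = [next(rotation) for _ in range(6)]
print(first_six)
# ['server-a', 'server-a', 'server-b', 'server-a', 'server-a', 'server-b']
```

Production implementations often interleave the turns more smoothly so that a heavily weighted server does not receive long bursts, but the long-run ratio is the same.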

Using these techniques, Network Load Balancing ensures that your app or website remains fast, stable, and ready for anything, from 10 users to 10 million. So, when to use which load balancing algorithm? The right algorithm depends on your application’s needs:

👉 For fairness or equal-sized tasks, Round Robin works well.

👉 When users stay connected for long sessions, Least Connections is smarter.

👉 If users must stay with the same server, IP Hash ensures “stickiness.”

👉 For servers with mixed capabilities, Weighted Round Robin balances based on server strength.

5 Types of Load Balancing You Should Know

Load balancing is not a one-size-fits-all solution. There are different types depending on your infrastructure, traffic scale, and goals. Here is a breakdown of the most common types of load balancing, explained simply.

✅ Hardware Load Balancers

These are dedicated physical devices that sit between your users and servers, directing traffic with very high reliability and speed. Think of them as industrial-grade traffic controllers built specifically for handling enormous volumes. They are popular in large enterprises where performance and uptime are critical, but they come with a hefty price tag and limited flexibility compared to software-based solutions.

✅ Software Load Balancers

Instead of using physical hardware, software load balancers like NGINX, HAProxy, or Traefik run on standard servers or virtual machines. They do the same job, distributing incoming traffic, but are more flexible, scalable, and cost-effective. Many are open-source, making them a popular choice for developers and cloud-native environments.

✅ DNS Load Balancing

This type of load balancing happens at the Domain Name System (DNS) level. It can direct users to different IP addresses based on round-robin rotation or even their geographic location. While it is lightweight and simple to set up, DNS load balancing has limitations, like slower failover and caching issues, which can delay how quickly users are redirected when a server goes down.

✅ Cloud-Based Load Balancers

Managed services from major cloud providers, such as AWS Elastic Load Balancing, Azure Load Balancer, Google Cloud Load Balancing, and DigitalOcean Load Balancer, offer fully managed load balancing. These are powerful options that integrate deeply with cloud tools, automatically scale with traffic, and come with built-in analytics, security, and failover features. They are a strong fit for modern cloud-native apps.

✅ Global Server Load Balancing (GSLB)

GSLB takes things to the next level by routing traffic across multiple regions around the world. It makes intelligent decisions based on latency, current server load, or the user’s location to deliver the best experience. This is essential for global applications where you want users in Europe, Asia, and North America all to connect to the closest and fastest server.
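A simplified sketch of that decision, assuming the balancer already has a latency measurement and a health flag per region (the region names and numbers below are made up for illustration):

```python
from typing import Dict, Optional


def pick_region(latency_ms: Dict[str, float], healthy: Dict[str, bool]) -> Optional[str]:
    """Return the healthy region with the lowest measured latency, or None if all are down."""
    candidates = {region: ms for region, ms in latency_ms.items() if healthy.get(region, False)}
    if not candidates:
        return None
    return min(candidates, key=candidates.get)


# Hypothetical measurements for one user; eu-west is closest but currently unhealthy.
measured = {"eu-west": 35.0, "us-east": 110.0, "ap-south": 180.0}
status = {"eu-west": False, "us-east": True, "ap-south": True}
print(pick_region(measured, status))  # us-east
```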

Each type of load balancing serves a different purpose. Whether you are running a small web app or a globally distributed platform, choosing the right kind helps ensure fast, reliable, and resilient service for your users.

What Are the Benefits of Network Load Balancing?

Network Load Balancing (NLB) is more than just a technical optimization. It is a foundational component of modern digital infrastructure. Whether you are running a personal blog or a high-traffic enterprise application, here is how NLB can significantly enhance your system’s performance and reliability:

✅ Scalability That Grows

As your application gains more users or experiences traffic spikes (say, during a product launch or holiday sale), load balancers make it easy to scale. You can add more servers to the pool, and the load balancer will automatically start distributing traffic across them. Likewise, when traffic drops, you can remove servers without disrupting the user experience. This dynamic scaling ensures you are always using just the right amount of resources.

✅ Built-In Redundancy for Failures

If one server crashes or becomes unresponsive, a load balancer can redirect traffic to the next available healthy server as soon as its health checks detect the failure. This redundancy ensures that a single point of failure does not take your entire app or site offline. It is like having a safety net that catches issues before they reach your users.

✅ High Availability, Always On

High availability means your services stay online, even in the face of hardware failures or sudden traffic surges. Load balancing helps achieve this by distributing requests in a way that avoids overloading any single server, while also constantly monitoring server health and removing problematic ones from rotation.

✅ Improved Performance And Faster Response Times

A load balancer does not just split traffic—it makes smart decisions. By analyzing current server load, it can route requests to the server that is least busy or closest to the user, reducing wait times and improving overall responsiveness. This is especially important for real-time apps, eCommerce platforms, or anything where speed matters.

✅ Cost Efficiency Through Optimized Resource Use

Without load balancing, you would need to over-provision servers just to prepare for peak traffic, which can be expensive. Load balancing lets you use your existing resources more efficiently, reducing waste and lowering infrastructure costs. You can get more performance out of fewer servers.

✅ Enhanced Security And Isolation

Many load balancers can be configured as the first line of defense between the internet and your backend servers. They help hide internal server details, absorb certain types of attacks, and even enforce access control or SSL termination. This adds an extra layer of security and helps reduce exposure to threats.

Real-World Use Cases of Network Load Balancing in 2025

Network Load Balancing is not just a backend concept; it plays a critical role in powering the apps and services millions rely on every day. From artificial intelligence to online shopping and education, here is how NLB is applied in the real world in 2025:

1. AI SaaS Platforms

Today’s AI-powered services like ChatGPT, GitHub Copilot, or image generators such as Midjourney handle thousands, sometimes millions, of user queries at the same time. These platforms run on clusters of powerful GPU servers that perform complex computations. Network Load Balancing ensures that incoming requests are smartly distributed across these clusters, avoiding overload and making sure each user gets fast, uninterrupted responses.

2. Healthcare IoT Systems

In modern hospitals and clinics, smart medical devices continuously collect patient data, like heart rate monitors, insulin pumps, and wearable trackers. This sensitive data is transmitted in real-time to processing systems or cloud-based diagnostic tools. Any delay or failure can have life-threatening consequences. Load balancing makes sure that this critical information flows smoothly and is processed without interruption, even if one server or data center goes down.

3. eCommerce Giants

During peak times (think Black Friday, Cyber Monday, or Singles’ Day), eCommerce platforms like Amazon or Alibaba experience explosive traffic. These companies rely on NLB to handle millions of transactions per minute without slowing down or crashing. The load balancer automatically directs each user request to the nearest or least busy server, allowing the system to scale instantly and maintain a smooth shopping experience.

4. Video Game Servers

In online multiplayer games like Fortnite, Call of Duty, or PUBG, players expect real-time responsiveness. To keep things fair and lag-free, these games run regional servers around the world. Load balancers distribute players based on their location and current server loads, ensuring quick matchmaking, low latency, and stable connections throughout the game. Without it, game lobbies would lag, crash, or time out frequently.

5. EdTech & Online Exams

As remote learning and online testing become the norm, platforms like Coursera, Udemy, or online exam systems need to guarantee performance and reliability. A single outage could mean a failed test or lost coursework. Load balancing distributes student connections across available resources, helping maintain smooth video streams, consistent performance, and secure exam sessions even during traffic spikes, like during final exams or major certification launches.

Popular Load Balancing Tools in 2025

Organizations now have a wide range of load balancing tools to choose from, ranging from cloud-native services to open-source solutions. Each tool comes with its strengths and is suited for different infrastructure needs. Let us explore the most widely used options:

1. AWS Elastic Load Balancing (ELB)

Amazon Web Services offers a robust suite of load balancers under its ELB family:

- Network Load Balancer (NLB) for ultra-low latency at Layer 4 (TCP/UDP).

- Application Load Balancer (ALB) for Layer 7 (HTTP/HTTPS) with smart content-based routing.

- Gateway Load Balancer for deploying third-party virtual appliances like firewalls or packet inspection tools.

ELB integrates tightly with other AWS services like EC2, Lambda, and ECS, and supports automatic scaling, health checks, and high availability across multiple Availability Zones, which makes it a natural fit for cloud-native apps on AWS.

2. Azure Load Balancer

Microsoft Azure provides a highly performant Layer 4 load balancer that distributes traffic efficiently across virtual machines (VMs) and containers.

- Offers low latency and high throughput.

- Seamlessly works with Virtual Networks, Azure Firewall, and Virtual Machine Scale Sets.

- Includes both internal and public load balancing options, making it suitable for internet-facing and private applications alike.

3. Google Cloud Load Balancing

Google Cloud’s load balancer stands out for its global reach and smart routing capabilities.

- Handles traffic across multiple regions with intelligent routing based on latency, geography, and backend load.

- Deep integration with Google Kubernetes Engine (GKE) makes it an excellent choice for containerized applications.

- Supports automatic scaling and SSL offloading to boost performance and security.

4. NGINX

A favorite in the DevOps world, NGINX is an open-source, high-performance solution that works as both a reverse proxy and load balancer.

- Supports HTTP, HTTPS, TCP, and UDP load balancing.

- Ideal for microservices architectures and Kubernetes ingress controllers.

- Highly customizable and lightweight, making it great for DIY cloud or on-prem deployments.

You can also use NGINX Plus, the commercial version, for advanced features like session persistence, live activity monitoring, and API gateway capabilities.

5. HAProxy

HAProxy (High Availability Proxy) is another powerful open-source tool that is widely trusted by enterprises.

- Supports both Layer 4 (TCP) and Layer 7 (HTTP) load balancing.

- Known for its speed, reliability, and fine-grained configuration options.

- Commonly used in hybrid cloud environments and on-premise data centers, especially when performance and control are top priorities.

HAProxy also offers an enterprise edition with advanced observability tools and a graphical interface. Whether you are building apps in the cloud, running a Kubernetes cluster, or maintaining a hybrid infrastructure, these load balancing tools provide the flexibility and performance needed to meet today’s demanding traffic conditions.

Best Practices for Implementing Network Load Balancing: Design for Failover

Load balancing is a crucial component of modern high-availability architecture. When implemented correctly, it ensures continuous operation even during component failures. Let us explore these best practices in more depth:

🔁 Deploy Redundant Load Balancers

Avoid using just one load balancer, as it creates a single point of failure. Instead, use either active-active (where all load balancers work simultaneously) or active-passive (a backup kicks in if the main one fails). This ensures high availability and uninterrupted service.

❤️ Use Health Checks

Set up health checks to monitor the condition of your backend servers. They help route traffic only to healthy servers, prevent downtime, and reduce overload. Deeper checks (like checking an API endpoint) provide a more accurate status than basic pings.
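A basic TCP-level check can be sketched with Python's standard `socket` module. This only confirms that a port accepts connections; a deeper check would call an application endpoint. The function names and the one-second timeout are illustrative assumptions.

```python
import socket
from typing import List, Tuple


def tcp_health_check(host: str, port: int, timeout: float = 1.0) -> bool:
    """Basic check: the server counts as up if a TCP connection can be opened in time."""
    try:
        with socket.create_connection((host, port), timeout=timeout):
            return True
    except OSError:
        # Covers refused connections, timeouts, and unreachable hosts.
        return False


def healthy_backends(backends: List[Tuple[str, int]]) -> List[Tuple[str, int]]:
    """Keep only the backends that currently pass the check."""
    return [(host, port) for host, port in backends if tcp_health_check(host, port)]
```

In use, `healthy_backends` would be called on a schedule with the pool's addresses, for example `healthy_backends([("10.0.0.11", 8080), ("10.0.0.12", 8080)])`, where the addresses are placeholders. Many systems also require several consecutive failures before removing a server, to avoid reacting to a single dropped probe.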

🔐 Enable SSL Termination

Let the load balancer handle encryption/decryption tasks. This saves CPU on your backend servers, simplifies certificate management, and ensures consistent security policies. It also makes it easier to support modern protocols like TLS 1.3 and HTTP/2.

🌍 Combine with CDN

Use a CDN (Content Delivery Network) to serve static files closer to users, reducing load on your main servers. It enhances performance, protects against DDoS attacks, and complements load balancers by managing different types of content intelligently.

📊 Log & Monitor Everything

Track key metrics like request rate, latency, and error rates to maintain visibility. Use tools like Grafana or Datadog to set alerts, monitor performance, and plan future capacity. Monitoring helps detect issues before they impact users.
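As a small illustration of the metrics mentioned above, the sketch below summarizes one window of request samples into a request count, an error rate, and a 95th-percentile latency. The sample format and function names are assumptions; dashboards such as Grafana or Datadog compute these from real telemetry.

```python
from typing import Dict, List, Tuple


def percentile(values: List[float], pct: int) -> float:
    """Nearest-rank percentile: smallest value with at least pct% of samples at or below it."""
    ordered = sorted(values)
    rank = (pct * len(ordered) + 99) // 100  # integer ceiling of pct/100 * n
    return ordered[max(rank, 1) - 1]


def summarize(samples: List[Tuple[float, bool]]) -> Dict[str, float]:
    """samples holds (latency_ms, succeeded) pairs collected over one time window."""
    if not samples:
        raise ValueError("no samples in this window")
    latencies = [latency for latency, _ in samples]
    failures = sum(1 for _, succeeded in samples if not succeeded)
    return {
        "requests": len(samples),
        "error_rate": failures / len(samples),
        "p95_latency_ms": percentile(latencies, 95),
    }


# Hypothetical window: three fast successes and one slow failure.
window = [(20.0, True), (25.0, True), (30.0, True), (400.0, False)]
print(summarize(window))
# {'requests': 4, 'error_rate': 0.25, 'p95_latency_ms': 400.0}
```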

⚙️ Auto-Scale Resources

Configure your system to automatically add or remove servers based on traffic. Predictable traffic spikes (like product launches) can be planned, while unexpected surges are handled in real-time. Use connection draining to safely remove busy servers.
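Connection draining can be pictured with a small state model. This is a sketch under simplified assumptions (a hypothetical `Backend` class with a manually updated connection count), not the behavior of any specific load balancer.

```python
from dataclasses import dataclass


@dataclass
class Backend:
    name: str
    active_connections: int = 0
    draining: bool = False

    def accepts_new_connections(self) -> bool:
        """A draining backend keeps serving existing connections but takes no new ones."""
        return not self.draining

    def safe_to_remove(self) -> bool:
        """Removal is safe once draining has started and every connection has finished."""
        return self.draining and self.active_connections == 0


backend = Backend("app-3", active_connections=2)
backend.draining = True                    # scale-in starts: stop sending new traffic
print(backend.accepts_new_connections())   # False
print(backend.safe_to_remove())            # False, two connections are still open
backend.active_connections = 0             # existing connections finish
print(backend.safe_to_remove())            # True
```

Managed services usually add a maximum drain time so that a stuck connection cannot block scale-in indefinitely.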

Challenges of Implementing Network Load Balancing

Despite its benefits, implementing NLB comes with some complexities. So, what are the challenges of implementing network load balancing?

Configuration Complexity

Setting up load balancers is not always plug-and-play. Choosing the right algorithm (like round robin vs. least connections), configuring accurate health checks, and handling SSL termination all require technical expertise. Mistakes can lead to poor performance or even downtime.

Cost Overhead

While load balancing improves reliability and scalability, it often comes at a price. High-performance hardware load balancers or premium cloud services (like AWS ELB or GCP Load Balancing) can become expensive, especially for small to mid-sized businesses.

Security Risks

If not configured correctly, a load balancer might leak internal server IPs, making them vulnerable to direct attacks. It may also allow malicious traffic if proper firewall rules or access controls are not enforced. Regular audits and security best practices are crucial.

Vendor Lock-in

Using a cloud provider’s proprietary load balancing solution might tie you to that ecosystem. Migrating to another cloud or hybrid setup later can be complex and costly, as configurations may not be easily transferable or compatible across platforms.

The Future of Load Balancing: Trends to Watch

As digital infrastructure continues to evolve, so too will the role of network load balancing. It is no longer just about distributing traffic; it is about doing it smarter, faster, and more sustainably. Emerging technologies like AI, edge computing, and serverless architectures are reshaping how load balancing will function in the years ahead. Here are some key trends to keep an eye on:

🤖 AI-Powered Load Balancing

Artificial intelligence will begin to make real-time decisions about traffic routing based on usage patterns, predictive analytics, and even external data like weather or market trends. This means load balancers can adjust proactively rather than reactively, improving efficiency and reducing downtime during unexpected spikes.

🌍 Edge-Native Load Balancing

Instead of relying solely on centralized data centers, edge-native load balancing will allow edge servers to manage traffic locally. This will drastically reduce latency for applications that demand real-time performance, like augmented reality, virtual reality, or smart cities.

⚙️ Serverless Integration

As serverless computing grows, load balancers will adapt to distribute traffic across functions that spin up on demand. This dynamic routing means resources are only used when needed, offering both performance and cost advantages, especially for unpredictable or bursty workloads.

🌱 Sustainability Awareness

The future of load balancing will also consider environmental impact. By directing traffic to data centers running on renewable energy or operating in cooler climates, organizations can reduce their carbon footprints while still maintaining performance and availability.

Stay Ahead with Smart Load Balancing for 2025 And Beyond

In a world that runs on real-time interactions, personalized experiences, and global access, Network Load Balancing is essential. It is the unseen infrastructure layer that ensures uptime, responsiveness, and fault tolerance.

Whether you are a DevOps engineer scaling cloud apps, a CTO planning digital transformation, or a startup founder looking to deliver the best UX, mastering NLB gives you a crucial edge. Do not just scale — scale smart. Audit your existing infrastructure and consider whether your current NLB setup meets the standards of 2025 and beyond.
