Have you ever struggled to keep your site dynamic and SEO-friendly with constant updates? When you manage hundreds or thousands of pages, manually pushing updates to search engines becomes impractical. The key question is: how can you ensure that frequent content updates positively impact your SEO rankings? The solution lies in crawler bots. These bots read your sitemap, index new updates, and play a crucial role in enhancing SEO. In this blog, we have compiled a web crawler list to help you understand which bots visit your site and how to manage them.

What Is A Web Crawler & How Does It Work?

A web crawler is an automated computer program designed for repetitive actions, particularly navigating and indexing documents online. Search engines like Google commonly use it to automate browsing and build an index of web content. The term ‘crawler’ is synonymous with ‘Bot’ or ‘Spider,’ and Googlebot is a well-known example.

So, how do web crawlers work?

Web crawlers begin by downloading a website’s robots.txt file, which states which paths crawlers may visit and can point to sitemaps listing URLs eligible for crawling. As they navigate pages, crawlers identify new URLs through hyperlinks and add them to a crawl queue to explore later.
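The crawl queue described above can be sketched in a few lines of Python. This is a minimal illustration, not how any particular search engine implements it: the `PAGES` dictionary is a hypothetical in-memory site standing in for real HTTP fetches, and `crawl` and `LinkExtractor` are names made up for this example.

```python
from collections import deque
from html.parser import HTMLParser
from urllib.parse import urljoin

# Hypothetical in-memory "site" (URL -> HTML). A real crawler would fetch over HTTP.
PAGES = {
    "https://example.com/": '<a href="/about">About</a> <a href="/blog">Blog</a>',
    "https://example.com/about": '<a href="/">Home</a>',
    "https://example.com/blog": '<a href="/blog/post-1">Post</a> <a href="/about">About</a>',
    "https://example.com/blog/post-1": "<p>No links here</p>",
}


class LinkExtractor(HTMLParser):
    """Collects the href value of every <a> tag in a page."""

    def __init__(self):
        super().__init__()
        self.links = []

    def handle_starttag(self, tag, attrs):
        if tag == "a":
            for name, value in attrs:
                if name == "href" and value:
                    self.links.append(value)


def crawl(seed):
    """Breadth-first crawl: visit a URL, queue every link not seen before."""
    queue = deque([seed])
    seen = {seed}
    order = []
    while queue:
        url = queue.popleft()
        order.append(url)
        parser = LinkExtractor()
        parser.feed(PAGES.get(url, ""))
        for href in parser.links:
            absolute = urljoin(url, href)  # resolve relative links against the page URL
            if absolute not in seen:
                seen.add(absolute)
                queue.append(absolute)
    return order


crawl_order = crawl("https://example.com/")
print(crawl_order)
```

The `seen` set is what keeps the crawler from looping forever when pages link back to each other, as the home and about pages do here.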

Different Types Of Web Crawlers: In A Nutshell

Web crawlers fall into three primary categories: in-house web crawlers, commercial web crawlers, and open-source web crawlers. Let us get acquainted with these categories before diving into the web crawler list itself.

In-house Web Crawler: These web crawler tools are created internally by organizations to navigate through their specific websites, serving diverse purposes such as generating sitemaps and scanning for broken links.

Commercial Web Crawler: Commercial web crawler tools are those accessible in the market for purchase and are typically developed by companies specializing in such software. Additionally, some prominent corporations might employ custom-designed spiders tailored to their unique website crawling requirements.

Open-source Web Crawler: Open-source crawlers, on the other hand, are available to the public under free/open licenses, allowing users to utilize and adapt them according to their preferences.

While they may lack certain advanced features present in their commercial counterparts, they present an opportunity for users to delve into the source code, gaining insights into the mechanics of web crawling.

A Compiled Web Crawler List: Most Common Ones In 2024

No single crawler is designed to handle the entire workload for every search engine. Instead, a diverse array of web crawlers exists to assess the content of your web pages, scanning them for the benefit of users across the globe and catering to the different requirements of various search engines. Now, let us delve into the web crawlers that are in use today.

Googlebot

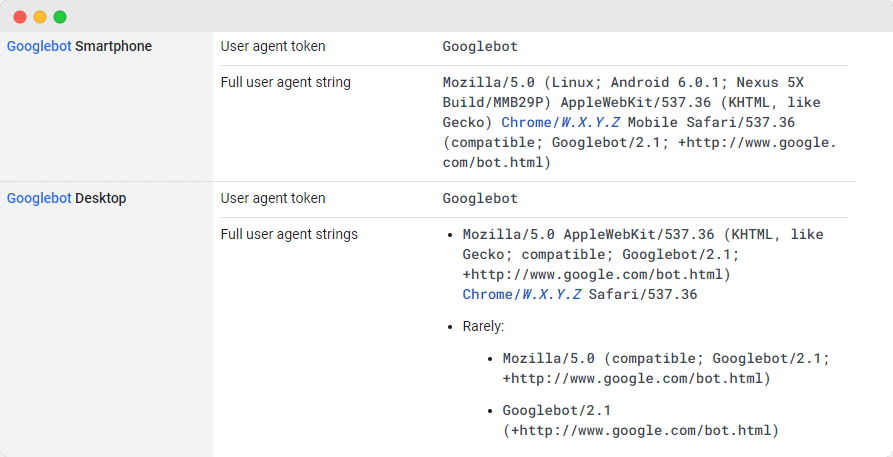

Googlebot, the generic web crawler tool from Google, plays a vital role in scanning websites for inclusion in the Google search engine. While there are technically two versions—Googlebot Desktop and Googlebot Smartphone (Mobile)—many experts treat them as a single crawler.

Both versions share a single product token (also called a user agent token) in robots.txt, ‘Googlebot,’ so a robots.txt rule written for Googlebot applies to both and you cannot target one version separately.

Googlebot revisits your site regularly unless it is blocked in the site’s robots.txt; for most sites, Google says it should not access the site more than once every few seconds on average. Google has historically kept stored copies of scanned pages, known as Google Cache, which allowed you to review older versions of your site.
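To see how a robots.txt rule for the ‘Googlebot’ token plays out, here is a small sketch using Python’s standard `urllib.robotparser`. The robots.txt content and the example.com URLs are invented for illustration, and Python’s parser is only an approximation of how Google itself interprets the file.

```python
from urllib.robotparser import RobotFileParser

# Hypothetical robots.txt: Googlebot may crawl everything except /private/,
# while every other crawler is blocked from the whole site.
ROBOTS_TXT = """\
User-agent: Googlebot
Disallow: /private/

User-agent: *
Disallow: /
"""

parser = RobotFileParser()
parser.parse(ROBOTS_TXT.splitlines())

googlebot_blog = parser.can_fetch("Googlebot", "https://example.com/blog/")
googlebot_private = parser.can_fetch("Googlebot", "https://example.com/private/page")
other_bot_blog = parser.can_fetch("SomeOtherBot", "https://example.com/blog/")

print(googlebot_blog, googlebot_private, other_bot_blog)
```

The same check works for any token in this list, for example ‘bingbot’, by changing the first argument to `can_fetch`.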

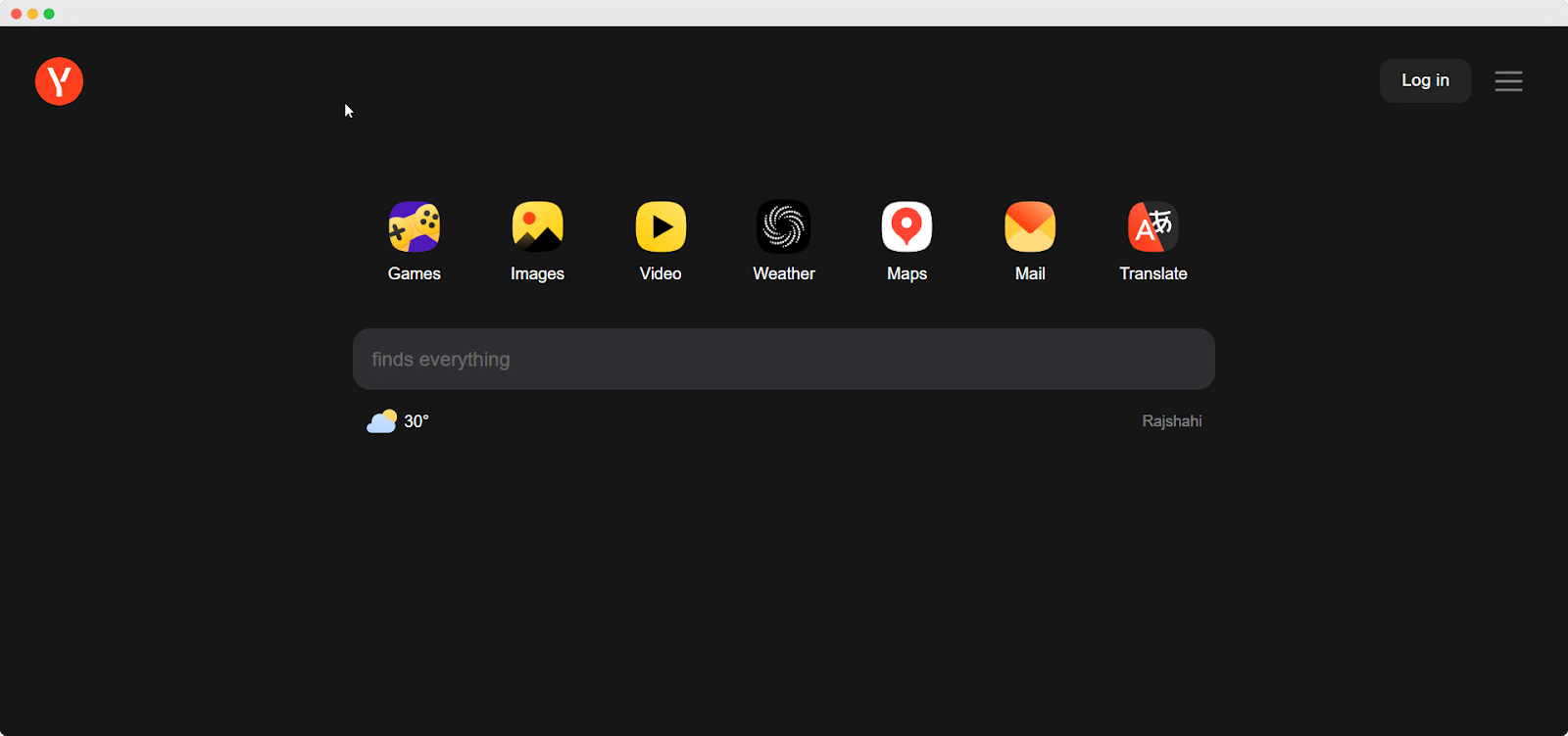

Yandex Bot

Yandex Bot is the crawler built specifically for Yandex, one of the largest and most widely used search engines in Russia. Website administrators can grant or restrict its access to their site’s pages through the robots.txt file.

Furthermore, they can help Yandex discover pages by adding a Yandex.Metrica tag to selected pages, request reindexing through Yandex Webmaster tools, or use the IndexNow protocol, which lets a site notify participating search engines about new, changed, or removed pages.
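As a rough sketch of what an IndexNow submission looks like, the snippet below builds (but does not send) a request. The endpoint and the JSON field names (`host`, `key`, `keyLocation`, `urlList`) follow the public IndexNow documentation as we understand it, so verify them against the current specification before relying on this. The host, key, and URLs are placeholders, and `build_indexnow_request` is a name invented for this example.

```python
import json
from urllib.request import Request

# Shared IndexNow endpoint as documented at the time of writing (assumption: verify before use).
INDEXNOW_ENDPOINT = "https://api.indexnow.org/indexnow"


def build_indexnow_request(host, key, urls):
    """Build a POST request announcing new, changed, or removed URLs."""
    payload = {
        "host": host,
        "key": key,
        # Assumes the key file is hosted at the site root as <key>.txt.
        "keyLocation": f"https://{host}/{key}.txt",
        "urlList": list(urls),
    }
    body = json.dumps(payload).encode("utf-8")
    return Request(
        INDEXNOW_ENDPOINT,
        data=body,
        headers={"Content-Type": "application/json; charset=utf-8"},
        method="POST",
    )


request = build_indexnow_request(
    "www.example.com",
    "hypothetical-key-123",  # placeholder key, not a real one
    ["https://www.example.com/new-page", "https://www.example.com/updated-page"],
)
sent_payload = json.loads(request.data.decode("utf-8"))
print(request.get_method(), sent_payload["urlList"])
# To actually submit, pass the request to urllib.request.urlopen(request).
```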

DuckDuck Bot

DuckDuckBot is the crawler for DuckDuckGo, a search engine built around user privacy. Website owners can access the DuckDuckBot API to check if their site has been crawled.

During this process, the DuckDuckBot updates its API database with new IP addresses and user agents, helping webmasters detect impostors or harmful bots posing as DuckDuckBot.
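One way to use a published IP list to spot impostors is sketched below. The network range is a placeholder from the reserved documentation address space, not a real DuckDuckBot address, and `classify_request` is a hypothetical helper; in practice you would load the ranges from DuckDuckGo’s published list.

```python
import ipaddress

# Placeholder range (RFC 5737 documentation block), NOT a real DuckDuckBot range.
PUBLISHED_RANGES = [ipaddress.ip_network("203.0.113.0/24")]


def classify_request(user_agent, remote_ip):
    """Label a request as 'verified', 'impostor', or 'other'."""
    if "duckduckbot" not in user_agent.lower():
        return "other"  # does not claim to be DuckDuckBot at all
    address = ipaddress.ip_address(remote_ip)
    if any(address in network for network in PUBLISHED_RANGES):
        return "verified"  # claims to be DuckDuckBot and comes from a listed range
    return "impostor"  # claims to be DuckDuckBot from an unlisted address


claimed_agent = "DuckDuckBot/1.1; (+http://duckduckgo.com/duckduckbot.html)"
print(classify_request(claimed_agent, "203.0.113.7"))
print(classify_request(claimed_agent, "198.51.100.9"))
print(classify_request("Mozilla/5.0", "203.0.113.7"))
```

Checking the IP matters because the user agent string alone is trivial to fake.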

Bingbot

In 2010, Microsoft developed Bingbot to analyze and catalog URLs, ensuring Bing delivers relevant and current search results. Similar to Googlebot, website owners can specify in their robots.txt whether they allow or disallow the ‘bingbot’ from scanning their site.

Furthermore, developers can differentiate between mobile-first indexing crawlers and desktop crawlers, as Bingbot has recently adopted a new agent type. This, combined with Bing Webmaster Tools, offers webmasters increased flexibility in presenting how their site is found and displayed in search results.

Apple Bot

Apple initiated the development of the Apple Bot to scan and catalog web pages for integration with Apple’s Siri and Spotlight Suggestions. The Apple Bot assesses various criteria to determine the content to prioritize in Siri and Spotlight Suggestions.

These criteria involve user interaction, the significance of search terms, the quantity and quality of links, signals based on location, and the overall design of web pages.

Sogou Spider

Sogou, a Chinese search engine, is reportedly the first search platform to index 10 billion Chinese pages. For those engaged in Chinese market activities, awareness of this widely used search engine crawler, the Sogou Spider, is essential. It abides by the robots exclusion standard (robots.txt) and crawl-delay settings.

As with the Baidu Spider, if your business does not target the Chinese market, we suggest you block this spider in robots.txt so that its crawling does not slow down your website.
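For sites that want to slow this spider down rather than block it, a crawl-delay rule is the usual approach. The sketch below reads such a rule with Python’s `urllib.robotparser`. The robots.txt content is invented, and the ‘Sogou web spider’ token is our assumption about the user agent name, so confirm it against Sogou’s own documentation.

```python
from urllib.robotparser import RobotFileParser

# Hypothetical robots.txt asking the Sogou crawler to wait 10 seconds between requests.
ROBOTS_TXT = """\
User-agent: Sogou web spider
Crawl-delay: 10
Disallow: /search/
"""

parser = RobotFileParser()
parser.parse(ROBOTS_TXT.splitlines())

sogou_delay = parser.crawl_delay("Sogou web spider")
other_delay = parser.crawl_delay("SomeOtherBot")  # no matching rule, so no delay is set
sogou_can_search = parser.can_fetch("Sogou web spider", "https://example.com/search/results")

print(sogou_delay, other_delay, sogou_can_search)
```

Not every crawler honors `Crawl-delay`, so treat it as a request rather than a guarantee.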

Baidu Spider

The primary search engine in China is Baidu, and its exclusive crawler is the Baidu Spider. Due to the absence of Google in China, it becomes crucial to allow the Baidu Spider to crawl your website if you aim to target the Chinese market. To recognize the Baidu Spider’s activity on your site, check for user agents like baiduspider, baiduspider-image, baiduspider-video, and others.

For those not engaged in Chinese business activities, it might be reasonable to block the Baidu Spider in your robots.txt file. Doing so stops the Baidu Spider from scanning your site, which in turn keeps your pages from being crawled for Baidu’s search engine results pages (SERPs).
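Before deciding whether to block it, you may want to see how often the Baidu Spider actually visits. The sketch below counts Baidu user agent tokens in a list of user agent strings. The sample strings are illustrative rather than taken from a real log, and `baidu_token` is a hypothetical helper.

```python
import re
from collections import Counter

# Matches "baiduspider" optionally followed by a variant suffix such as "-image".
BAIDU_UA = re.compile(r"baiduspider(?:-[a-z]+)?", re.IGNORECASE)

# Illustrative user agent strings, as they might appear in a server access log.
SAMPLE_USER_AGENTS = [
    "Mozilla/5.0 (compatible; Baiduspider/2.0; +http://www.baidu.com/search/spider.html)",
    "Baiduspider-image+(+http://www.baidu.com/search/spider.htm)",
    "Mozilla/5.0 (Windows NT 10.0; Win64; x64) AppleWebKit/537.36",
    "Baiduspider-video",
]


def baidu_token(user_agent):
    """Return the lowercase Baidu token found in a user agent, or None."""
    match = BAIDU_UA.search(user_agent)
    return match.group(0).lower() if match else None


hits = Counter(
    token for token in map(baidu_token, SAMPLE_USER_AGENTS) if token is not None
)
print(dict(hits))
```

User agent strings can be spoofed, so counts like these are a starting point rather than proof of genuine Baidu traffic.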

Slurp Bot

Yahoo’s search robot, Slurp Bot, plays a crucial role in crawling and indexing pages not only for Yahoo.com but also for its affiliated platforms like Yahoo News, Yahoo Finance, and Yahoo Sports.

Without this crawl, relevant sites would not be listed on those platforms. The indexed content is also what allows Yahoo to personalize the experience for its users with more pertinent results.

Facebook External Hit

The Facebook Crawler, also referred to as Facebook External Hit, examines the HTML of a website or app shared on Facebook. It is responsible for creating a preview of shared links on the platform, displaying the title, description, and thumbnail image.

The crawl must occur promptly, as any delay may result in the custom snippet not being displayed when the content is shared on Facebook.
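The title, description, and thumbnail in a link preview are commonly supplied through Open Graph `<meta>` tags in the page’s HTML. The sketch below pulls those tags out of a sample page the way a preview crawler might. The HTML is invented, `OpenGraphParser` is a hypothetical name, and this is not Facebook’s actual implementation.

```python
from html.parser import HTMLParser


class OpenGraphParser(HTMLParser):
    """Collects <meta property="og:..." content="..."> pairs from a page."""

    def __init__(self):
        super().__init__()
        self.tags = {}

    def handle_starttag(self, tag, attrs):
        if tag != "meta":
            return
        attributes = dict(attrs)
        prop = attributes.get("property") or ""
        content = attributes.get("content")
        if prop.startswith("og:") and content is not None:
            # Keep the first value if a property is repeated.
            self.tags.setdefault(prop, content)


# Hypothetical page head for illustration.
SAMPLE_HTML = """
<html><head>
<title>Fallback title</title>
<meta property="og:title" content="Top Web Crawlers">
<meta property="og:description" content="A list of common crawler bots.">
<meta property="og:image" content="https://example.com/thumb.png">
<meta name="viewport" content="width=device-width">
</head><body></body></html>
"""

og_parser = OpenGraphParser()
og_parser.feed(SAMPLE_HTML)
preview = og_parser.tags
print(preview)
```

If these tags are missing, a preview has to fall back on whatever it can infer from the page, which is one reason shared links sometimes show the wrong image or text.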

Swiftbot

Swiftype is a custom search engine for your own website. It enhances your site’s search functionality by combining search technology, algorithms, a content ingestion framework, clients, and analytics tools. Particularly beneficial for websites with numerous pages, Swiftype provides a user-friendly interface to efficiently catalog and index all pages.

Playing a vital role in this process is Swiftbot, Swiftype’s web crawler. Notably, Swiftbot distinguishes itself by exclusively crawling sites based on customer requests, setting it apart from other bots.

Top Web Crawler List To Master SEO In 2024

The curated web crawler list presented in this blog serves as a valuable resource for keeping your site dynamic and SEO-friendly. In 2024, knowing which crawlers visit your site, and deciding which ones to allow or block, helps your pages get indexed promptly by the search engines that matter to your audience, so your team can focus on creating quality content.

If you found this article useful, do share it with others. Also, do not forget to subscribe to our blog for more insights like these to help you stand out in search rankings.